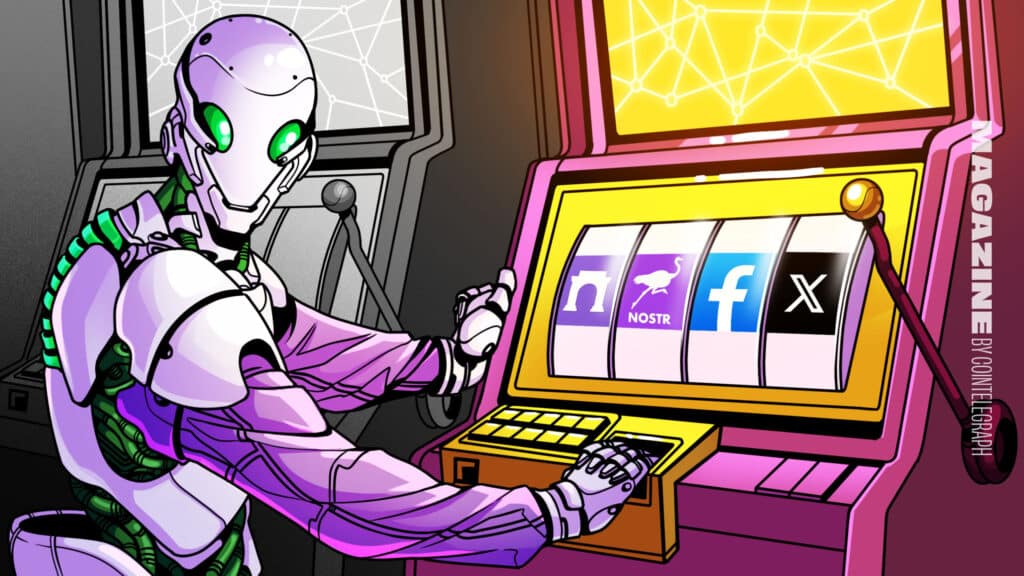

‘Algorithmic choice’ can fix social media – but only on decentralized platforms

3 months ago Benito Santiago

Nearly everyone seems to agree that social media is overrun by cancel-culture mobs espousing ideological purity and peddling conspiracy theories.

X and Facebook have been accused of spreading hate and conflict in the wake of the riots in the United Kingdom, showing how a handful of social media posts can fuel anger and rage.

In response, governments around the world are cracking down on free speech. Turkey banned Instagram, Venezuela banned X and the UK government is sending people to jail for inciting riots – and in some cases, just for having bad opinions.

But there is a better way to fix social media than banning accounts and censoring “misinformation.”

The root cause of the problem isn't fake news in individual posts; it's how social media algorithms prioritize conflict and highlight the most compelling content to drive engagement and ad dollars.

“This is going to sound a little crazy, but I think the free speech debate is a complete distraction right now,” former Twitter boss Jack Dorsey told the Oslo Freedom Forum in June. “I think the real debate should be about free will.”

Social media algorithms marketplace

Dorsey argues that black box social media algorithms are affecting our agency by distorting our reality and hijacking our mental space. He believes the solution is to allow users to choose between different algorithms, giving them more control over the type of content they are shown.

“Give people a choice about which algorithm they want to use, from a party they trust, so people can plug into these networks and build their own algorithms so they can see what they want. And you can power them as well. And give people the choice to really have a marketplace.”

It's a simple and compelling idea, but there are serious hurdles to overcome before mainstream platforms voluntarily give users a choice of algorithms.

Why are social media platforms against algorithmic choice?

Princeton computer science professor Arvind Narayanan has extensively researched the impact of social media algorithms on society. He tells Cointelegraph that while Dorsey's idea is great, it's unlikely to happen on the big platforms.

“The algorithmic marketplace is an important intervention. Centralized social media platforms don't allow users enough control over their feed and the trend is less and less control, as recommendation algorithms play a more central role.”

“I expect that centralized platforms won't allow third-party algorithms for the same reasons they don't provide user controls in the first place. That's why decentralized social media is so important.”

There are some early attempts at decentralized platforms like Farcaster and Nostr, but Twitter spinoff Bluesky is far more advanced and has this functionality already built-in. However, so far it has only been used for special content feeds.

Bluesky to test algorithm selection

But William Brady, an assistant professor at Northwestern University, told Cointelegraph that he will be testing a new algorithm on Bluesky in the coming months as an alternative to the site's main algorithm.

Studies show that up to 90% of the political content we see online comes from a small minority of highly motivated and partisan users. “So trying to minimize their impact is one key feature,” he says.

The “representative diversification algorithm” aims to better represent the most common views, rather than the most extreme views, without making the feed vanilla.

“We're certainly not avoiding strong moral or political views, because we think that's good for democracy. But we remove the most toxic content that we know is associated with the most extreme people on that stream.”
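The article does not describe how Brady's algorithm works internally, so the following is only a minimal sketch of the two ideas he mentions: limiting how far a handful of hyperactive accounts can dominate a feed, and dropping the most toxic posts. The `FeedPost` fields, the `toxicity` score, the 0.8 threshold and the divide-by-rank discount are all assumptions made for illustration, not details of his work.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedPost:
    post_id: str
    author: str
    toxicity: float  # assumed score in [0, 1] from some external classifier
    engagement: int  # assumed total of likes, replies and reposts


def diversify(posts, max_toxicity=0.8):
    """Rank posts so that prolific authors cannot crowd out everyone else.

    1. Drop posts whose assumed toxicity score exceeds max_toxicity.
    2. Discount each author's posts by rank: their best post keeps its full
       engagement score, the second is halved, the third is divided by 3...
    """
    kept = [p for p in posts if p.toxicity <= max_toxicity]

    by_author = defaultdict(list)
    for post in kept:
        by_author[post.author].append(post)

    scored = []
    for author_posts in by_author.values():
        author_posts.sort(key=lambda p: p.engagement, reverse=True)
        for rank, post in enumerate(author_posts, start=1):
            scored.append((post.engagement / rank, post))

    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [post for _, post in scored]
```

With this discount, an account that posts ten times still appears in the feed, but its later posts have to compete against the first posts of quieter users.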

Create a personalized algorithm using AI

Approaching the issue from a different angle, Grok AI researcher and engineer Rick Lammers recently developed an open source browser extension that works on desktop and mobile. It scans and evaluates posts from the people you follow and automatically hides posts based on content and sentiment.

Lammers told Cointelegraph that he created the extension so he could keep following people on X for their posts without having to read their inflammatory political content.

“I needed something between unfollowing and following all content, which led me to selectively hide posts based on topics using an LLM/AI.”

Using large language models (LLMs) to sort through social media content opens up the tantalizing possibility of designing personalized algorithms that don't require the social platforms to agree to any change.

But reordering content on your feed is a bigger challenge than simply hiding posts, Lammers says, so we're not there yet.
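As a rough illustration of the hide-by-topic approach described above, the sketch below filters a list of posts through a pluggable classifier. It is not the extension's actual code: `keyword_classifier` is a toy stand-in for an LLM call, and the topic names and keyword lists are invented for the example.

```python
from typing import Callable, Iterable, List, Set

# A classifier maps post text to a set of topic labels. A real tool would
# likely call an LLM here; any callable with this shape can be swapped in.
Classifier = Callable[[str], Set[str]]


def keyword_classifier(text: str) -> Set[str]:
    """Toy stand-in for an LLM: label a post by simple keyword matching."""
    keywords = {
        "politics": ("election", "senator", "parliament"),
        "ai": ("llm", "neural", "transformer"),
    }
    lowered = text.lower()
    return {
        topic
        for topic, words in keywords.items()
        if any(word in lowered for word in words)
    }


def visible_posts(
    posts: Iterable[str],
    hidden_topics: Set[str],
    classify: Classifier = keyword_classifier,
) -> List[str]:
    """Keep posts, in their original order, whose labels avoid hidden topics."""
    return [text for text in posts if not (classify(text) & hidden_topics)]
```

Note that this only removes posts and leaves the remaining order untouched, which matches the limitation Lammers describes: hiding is the easy part, while reordering a feed is harder.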

How social media algorithms amplify conflict

When social media started in the early 2000s, content was displayed chronologically. But in 2011, Facebook began selecting “Top Stories” to show users in its News Feed.

Twitter followed in 2015 with its “While You Were Away” feature and moved to an algorithmic timeline in 2016. The world as we knew it had ended.

Although everyone says they hate social media algorithms, they are incredibly useful in helping users navigate through an ocean of content to find the most interesting and engaging posts.

Dan Romero, founder of the decentralized platform Farcaster, pointed Cointelegraph to a thread he wrote on the topic. He argued that every world-class consumer app uses machine learning-based feeds because that's what users want.

“This is [the] overwhelming consumer revealed preference in terms of time spent,” he said.

Unfortunately, the algorithms quickly learned that the content people were most likely to engage with involved conflict and hate, misrepresentation of political views, conspiracy theories, outrage and public shaming.
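The difference between a chronological feed and an engagement-ranked one can be shown with a small sketch. The `TimelinePost` fields and the weights in `engagement_score` are assumptions chosen for illustration; real platform ranking systems are far more complex and are not public in this form.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelinePost:
    text: str
    posted_at: datetime
    likes: int
    replies: int
    reposts: int


def chronological(posts):
    """Newest first, regardless of how people reacted."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def engagement_score(post):
    # Assumed weights: replies and reposts count for more than likes,
    # so posts that start arguments score highly.
    return post.likes + 2 * post.replies + 3 * post.reposts


def by_engagement(posts):
    """Highest engagement first, regardless of when it was posted."""
    return sorted(posts, key=engagement_score, reverse=True)
```

Under these assumed weights, an old post that triggered a long argument in the replies outranks a newer, calmer one, which is the dynamic the article describes.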

“You open your feed and you're bombarded with the same things,” says Dave Katudal, founder of the social networking platform Lively.

“I don't want to be hit by Yemen, Iran, Gaza, Israel and all that. […] They are clearly pushing for some kind of political and disruptive conflict – they want conflict.”

Research shows that algorithms consistently amplify moral, emotional and group-based content. Brady explains that this is due to an evolutionary adaptation.

“We have a bias to pay attention to this type of content because in small group settings this really gives us an advantage,” he said. “If you pay attention to the emotional content of your environment, it will help you survive physical and social threats.”

Social media bubbles work differently

The old concept of a social media bubble – where users only get content they agree with – is actually incorrect.

While bubbles do exist, research shows that users are exposed to more opinions and ideas they hate than ever before. That's because they're more likely to engage with content that enrages them, whether by getting into an argument, dunking on it in a quote tweet, or joining a pile-on.

Content you hate is like quicksand – the more you fight it, the more of it the algorithm serves up. But it still reinforces people's beliefs and darkest fears by highlighting the very worst of “the other side.”

Like the cigarette companies of the 1970s, platforms are acutely aware of the damage that a focus on engagement can do to individuals and society, but too much money seems at stake to change direction.

Meta generated $38.32 billion in ad revenue last quarter (98% of its total revenue), and chief financial officer Susan Li attributed most of that to AI-driven ad placements. Facebook has trialed “bridging algorithms” that aim to bring people together rather than divide them.

Bluesky, Nostr and Farcaster: Algorithms Marketplace

Realizing he couldn't bring meaningful change to Twitter, Dorsey created Bluesky with the intention of building an open-source, decentralized alternative. But, disillusioned with Bluesky making the same mistakes as Twitter, he has now thrown his weight behind Bitcoin-friendly Nostr.

The decentralized network allows users to choose which clients and relays to use, which could give users a wide selection of algorithms.

But one big issue with decentralized platforms is that building a good algorithm is a huge task that can be beyond the community's capacity.

A group of developers built a decentralized feed marketplace for Farcaster last October, but no one seemed interested.

The reason, according to Romero, is that community-developed feeds “are not efficient and economical for modern consumer UX implementations.” He suggested the approach might still work as an open-source, self-hosted client.

“Making a good machine learning feed is hard and requires significant resources to create effectively and real-time,” he said in another thread.

“If you want to make a marketplace with good UX, you need to create a backend where developers can upload their models and the client can run the models in their [infrastructure]. This obviously has privacy concerns, but it's possible.”

The big problem, however, is “TBD if consumers are willing to pay for your algo.”
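To make the marketplace idea more concrete, here is a minimal in-memory sketch of a registry where developers publish feed algorithms and a client picks one by name. It is not how Farcaster, Nostr or Bluesky implement anything: the `AlgorithmMarketplace` class, its method names and the dictionary-shaped posts are all hypothetical, and a real backend would also need hosting, sandboxing, privacy controls and billing.

```python
from typing import Callable, Dict, List

# A feed algorithm takes a list of posts and returns them reordered or filtered.
FeedAlgorithm = Callable[[List[dict]], List[dict]]


class AlgorithmMarketplace:
    """Hypothetical registry of third-party feed algorithms."""

    def __init__(self) -> None:
        self._algorithms: Dict[str, FeedAlgorithm] = {}

    def publish(self, name: str, algorithm: FeedAlgorithm) -> None:
        """Called by a developer to list an algorithm under a unique name."""
        if name in self._algorithms:
            raise ValueError(f"algorithm {name!r} is already published")
        self._algorithms[name] = algorithm

    def available(self) -> List[str]:
        """Names a client could show the user to choose from."""
        return sorted(self._algorithms)

    def build_feed(self, name: str, posts: List[dict]) -> List[dict]:
        """Run the user's chosen algorithm over a copy of the posts."""
        return self._algorithms[name](list(posts))


def newest_first(posts: List[dict]) -> List[dict]:
    return sorted(posts, key=lambda p: p["timestamp"], reverse=True)


def most_liked(posts: List[dict]) -> List[dict]:
    return sorted(posts, key=lambda p: p["likes"], reverse=True)
```

The point of the design is that the client holds the user's choice, so switching from `most_liked` to `newest_first` is one call to `build_feed` with a different name rather than a change to the platform.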

Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a film journalist for News Corp Australia, SA Wind and national entertainment writer for Melbourne Weekly.

Follow the author @andrewfenton