Artificial intelligence is finding its way into every aspect of life, including the American legal system. But as the technology becomes more ubiquitous, the problem of AI-generated lies or nonsense, known as “hallucinations,” remains.

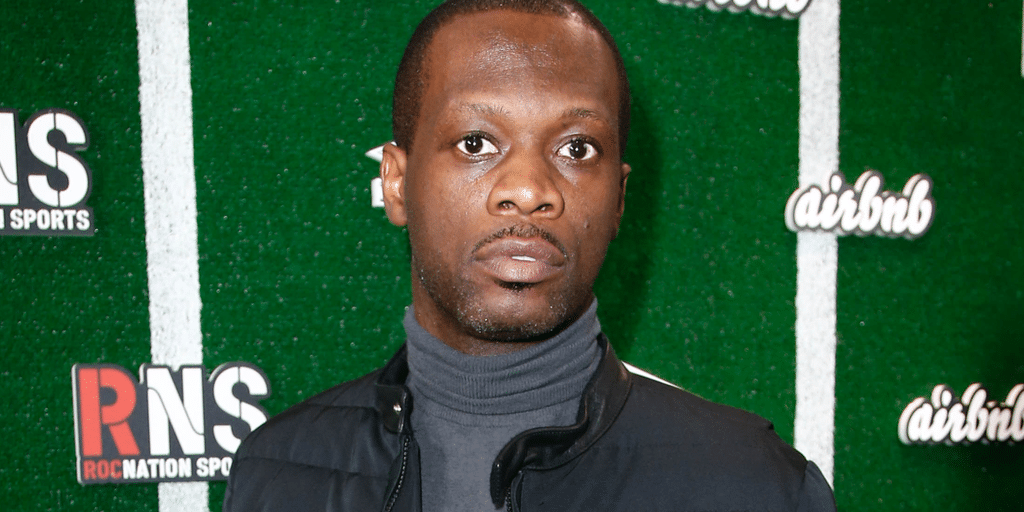

These AI hallucinations are at the center of claims by former Fugees member Prakazrel “Pras” Michel, who accused an AI model created by EyeLevel of undermining his defense in a multimillion-dollar fraud case, a claim that EyeLevel co-founder and COO Neil Katz called untrue.

In April, Michel was convicted on 10 counts, including conspiracy, tampering with evidence, falsifying documents, and acting as an unregistered foreign agent. He faces up to 20 years in prison after being convicted of acting as an agent of China, prosecutors said.

“We were brought in by Pras Michel's lawyers to do something different, something that has never been done before,” Katz told Decrypt in an interview.

According to an Associated Press report, during closing arguments, Michel's attorney at the time, defense attorney David Kenner, misquoted a lyric from Sean “Diddy” Combs' “I'll Be Missing You” and misattributed the song to the Fugees.

As Katz explained, EyeLevel was tasked with building an AI trained on court transcripts that would allow lawyers to ask complex natural language questions about what happened at trial. The model does not, he said, pull in other information from the internet.

Court proceedings generate a lot of paperwork. The ongoing criminal trial of FTX founder Sam Bankman-Fried has produced hundreds of documents. Separately, the bankruptcy of the collapsed cryptocurrency exchange has more than 3,300 documents—some of which are dozens of pages long.

“This is an absolute game changer for complex litigation,” Kenner wrote in an EyeLevel blog post. “The system has turned hours or days of legal work into seconds. This is a look at how cases will be handled in the future.”

On Monday, Michel's new defense attorney, Peter Zeidenberg, filed a motion for a new trial in the U.S. District Court for the District of Columbia, which was posted online by Reuters.

“Kenner used an experimental AI program to write his closing argument, which presented nonsensical arguments, contradicted his plans, and failed to highlight key weaknesses in the government's case,” Zeidenberg wrote. He added that Michel is seeking a new trial because “many errors, many of which were caused by his ineffective trial counsel, undermine confidence in the verdict.”

Katz denied the claims.

“It did not happen the way they say; this team has no knowledge of artificial intelligence or of our specific product,” Katz told Decrypt.

Michel's attorneys have not yet responded to Decrypt's request for comment. Katz also denied claims that Kenner has a financial interest in EyeLevel, the company hired to assist Michel's legal team.

“The allegation that David Kenner and his associates have a secret financial interest in our company is untrue,” Katz told Decrypt. “Kenner wrote a very positive review of our software's performance because that is how he felt. He wasn't paid for it; he wasn't given stock.”

Launched in 2019, Berkeley-based EyeLevel develops generative AI models for consumers (EyeLevel for CX) and for legal professionals (EyeLevel for Law). Katz said EyeLevel was one of the first developers to work with ChatGPT creator OpenAI, and that the company aims to provide “real AI,” meaning hallucination-free and robust tools, to people and lawyers who don't have the money to pay for a large team.

Typically, generative AI models are trained on large data sets collected from various sources, including the Internet. What makes EyeLevel different is that this AI model is trained only on court documents, Katz said.

“The [AI] was trained only on the transcripts, only on the facts presented in court, only on what was said by both sides and also by the judge,” Katz said. “And when you ask questions of this AI, it gives factual, hallucination-free answers based on what happened.”

No matter how an AI model is trained, experts warn that such programs have a tendency to lie or hallucinate. In April, ChatGPT falsely accused U.S. criminal defense attorney Jonathan Turley of sexual assault. The chatbot even went so far as to provide a fake link to a Washington Post article to prove its claim.

OpenAI is investing heavily in combating AI hallucinations, bringing in third-party red teams to test its suite of AI tools.

“We strive to be as clear as possible when users sign up to use the tool that ChatGPT may not always be accurate,” OpenAI said on its website. “However, we recognize that there is much more work to be done to further reduce the likelihood of hallucinations and educate the public on the current limitations of these AI tools.”

Edited by Stacy Elliott and Andrew Hayward.