How the US military's billion-dollar AI gamble will pay off

5 days ago Benito Santiago

War is more profitable than peace, and AI developers are eager to capitalize on future battlefields by offering the US Department of Defense a range of generative AI tools.

The latest evidence of this trend came last week, when Claude AI developer Anthropic announced that it would partner with military contractor Palantir and Amazon Web Services (AWS) to give US intelligence agencies and the Pentagon access to Claude 3 and 3.5.

Anthropic says Claude will give US defense and intelligence agencies powerful tools for rapid data processing and analysis, allowing the military to conduct faster operations.

Experts say these partnerships will allow the Defense Department to quickly adopt advanced AI technologies without having to develop them internally.

“Like any technology, the commercial market will always move faster and integrate faster than the government can,” retired US Navy Rear Admiral Chris Baker told Decrypt in an interview. “If you look at how SpaceX went from concept to launch and recovery at sea, the government may still be considering preliminary design reviews in that same period.”

Baker, former commander of the Navy's Information Warfare Systems Command, noted that advanced technology originally developed for government and military purposes making its way into public use is nothing new.

“The internet started as a defense research initiative before it became available to the public, and now it's a basic expectation,” Baker said.

Anthropic is just the latest AI developer to offer its technology to the US government.

Following the Biden administration's October memo on advancing US leadership in AI, ChatGPT developer OpenAI expressed support for the US and its allies developing AI aligned with “democratic values.” Meta also recently announced that it would make its open-source Llama AI available to the Department of Defense and other US agencies to support national security.

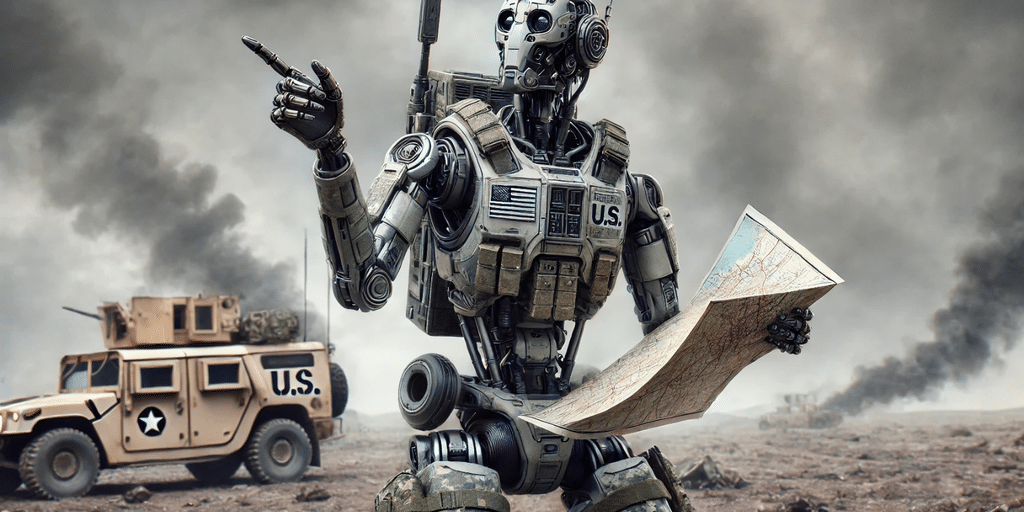

During Axios' Future of Defense event in July, retired Army General Mark Milley said advances in artificial intelligence and robotics will make AI-powered robots a large part of future military operations.

“Ten to fifteen years from now, my guess is a third, maybe 25% to a third of the US military will be robotic,” Milley said.

Anticipating AI's critical role in future conflicts, the DoD's 2025 budget requests $143.2 billion for research, development, testing and evaluation, including $1.8 billion specifically allocated to AI and machine learning projects.

Protecting America and its allies is a priority. Still, AI Squared CEO Dr. Benjamin Harvey pointed out that government partnerships can also help AI companies secure stable revenue, address problems early, and shape future regulations.

“AI developers want to use federal government use cases as learning opportunities to understand real-world challenges unique to this sector,” Harvey told Decrypt. “This experience gives them an edge in anticipating issues that may arise in the private sector over the next five to 10 years.”

He continued, “It also helps them stay ahead in policy development and regulatory alignment by actively shaping governance, policies and practices.”

Harvey, who previously served as the U.S. National Security Agency's chief of operational data science, also said another reason developers seek deals with government agencies is to establish themselves as important to the government's growing AI needs.

With billions of dollars earmarked for AI and machine learning, the Pentagon is investing heavily in boosting America's military capabilities, aiming to harness the rapid development of AI technologies to its advantage.

While the public may imagine AI's role in the military as autonomous robots roaming the battlefields of the future, the reality is far less dramatic and far more data-driven, experts say.

“In the military context, we're mostly seeing very advanced autonomy and more basic machine learning elements, where the machines help with decision-making, but that doesn't typically involve decisions to release weapons,” Kratos Unmanned Systems Division President Steve Finley told Decrypt. AI, he said, greatly speeds up data collection and analysis to inform decisions and conclusions.

Founded in 1994, San Diego-based Kratos Defense has extensive partnerships with the US military, particularly the Air Force and Navy, to develop advanced unmanned systems such as the Valkyrie unmanned combat aircraft. According to Finley, keeping humans in the decision-making loop is critical to preventing the dreaded “Terminator” scenario.

“If a weapon is involved or a human life is at risk, the human decision-maker is always in the mix,” Finley said. “There is always a safeguard, a ‘cease’ or ‘hold,’ on any weapons release or decisive action.”

Despite how far generative AI has come since ChatGPT's launch, experts including author and scientist Gary Marcus say the limitations of current AI models cast doubt on the technology's real effectiveness.

“Businesses have discovered that large language models are not particularly reliable,” Marcus told Decrypt. “They hallucinate, make boneheaded errors, and that limits their real applicability. You don't want something that hallucinates plotting your military strategy.”

Known for criticizing exaggerated AI claims, Marcus is a cognitive scientist, AI researcher, and author of six books on artificial intelligence. Addressing the dreaded “Terminator” scenario and echoing the Kratos executive, Marcus emphasized that deploying fully autonomous AI-powered robots would be a mistake.

“It would be stupid to hook them up to warfare without humans in the loop, especially considering their clear lack of reliability right now,” Marcus said. “I am concerned that many people are taken in by these kinds of AI systems and don't understand the reality of their reliability.”

As Marcus explains, many in the AI field have long believed that feeding AI systems more data and computing power will continually increase their capabilities.

“Over the last few weeks, there have been rumors from many companies that the so-called scaling laws have run out, and that there are diminishing returns,” Marcus added. “So I don't think the military should realistically expect that all these problems are going to be solved. These systems are probably going to be unreliable, and you don't want to use unreliable systems in combat.”

Edited by Josh Quittner and Sebastian Sinclair
