
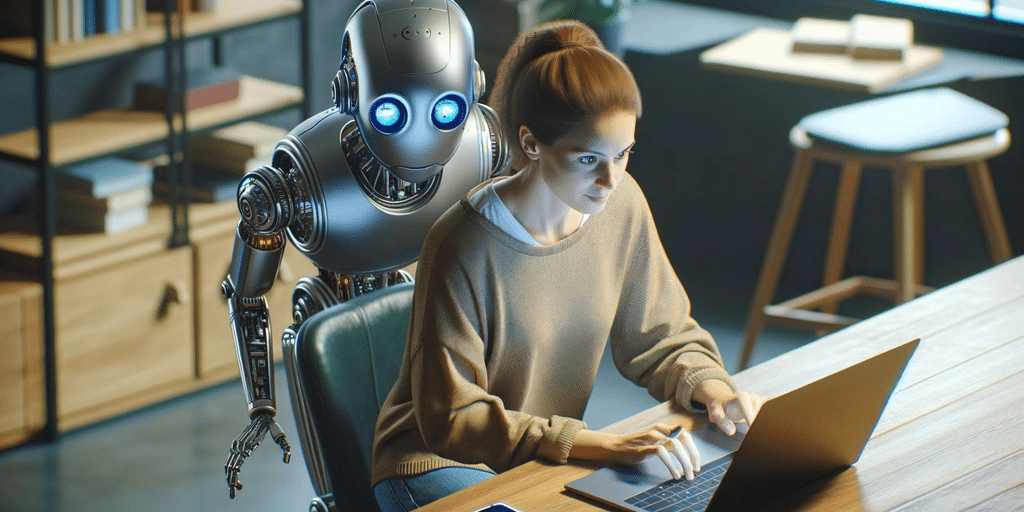

When Microsoft announced its new Recall feature at its annual developer conference on Monday, the news made waves beyond the AI industry. Cybersecurity and privacy experts took notice, too, and they're concerned.

At the event, Microsoft said that new Copilot-enabled computers will capture what appears on screen, including emails, websites and apps, as AI-indexed snapshots stored on the device, so that users can find it again later. Reaction to the announcement has been mixed, with some security experts calling the feature a natural target for spyware and cybercriminals.

“This is a company that wants to record literally everything you do on your computer,” Geometric Intelligence founder and CEO Gary Marcus tweeted. “If you don't think Microsoft Recall, local or not, will be one of the biggest cyber targets in history, you're not paying attention.”

“I'm so glad Microsoft is here, helping me remember why I don't use Windows, and when I do, I disable the ‘smart’ features they added,” said Emily Young, a writer for Linus Tech Tips.

“In my day we called this spyware,” wrote Molly White, a software engineer, crypto researcher and critic.

With Recall, everything on the screen is captured, including passwords, unless the app or form hides them automatically.

Although the premise is simple, implementing Recall requires a lot of care, cybersecurity expert Katelyn Bowden told Decrypt.

“Recall appears to work like existing search engines, with a broader scope,” said Bowden, adding that Recall only works on PCs with certain hardware configurations. “You have browsing history and an index of file contents on your computer, and this is practically no different. And unlike most files, Microsoft says Recall data is encrypted.”

“If Microsoft starts offloading data or collecting it for training sets, recommendation engines or off-machine models, user privacy could be compromised,” she said.

Bowden is a member of the hacker collective known as the Cult of the Dead Cow and also serves as the chief marketing officer of the open-source, privacy-focused Veilid project. She said more clarity is needed on how AI tools are used.

“I always feel more comfortable when companies that make AI products are transparent about what data sets were used to train the software,” Bowden said. “There is a lack of transparency from Microsoft, as far as I'm concerned. If people don't know what data was used to train the model, they shouldn't use it.”

With OpenAI, Google and Microsoft pushing hard to bring generative AI products to market, several projects and groups are offering decentralized and open-source alternatives, including Venice AI, FLock, PolkaBot AI and the Superintelligence Alliance.

As Ethereum co-founder Vitalik Buterin wrote earlier today, open-source AI is the best way to avoid futures where “most of human thought is read and controlled by a few central servers.”

Erik Voorhees, founder and CEO of Venice AI, previously told Decrypt that “people should assume that everything they write to OpenAI will go to them and they will have it forever,” adding that “the only way to solve this is by using a service where the data doesn't go to a central repository in the first place.”

Edited by Ryan Ozawa.
