Say goodbye to typing and hello to thinking. At the Web Summit conference, AI translation company Unbabel gave a fascinating live demonstration of Project Halo, which aims to enable silent, thought-based communication between humans and machines.

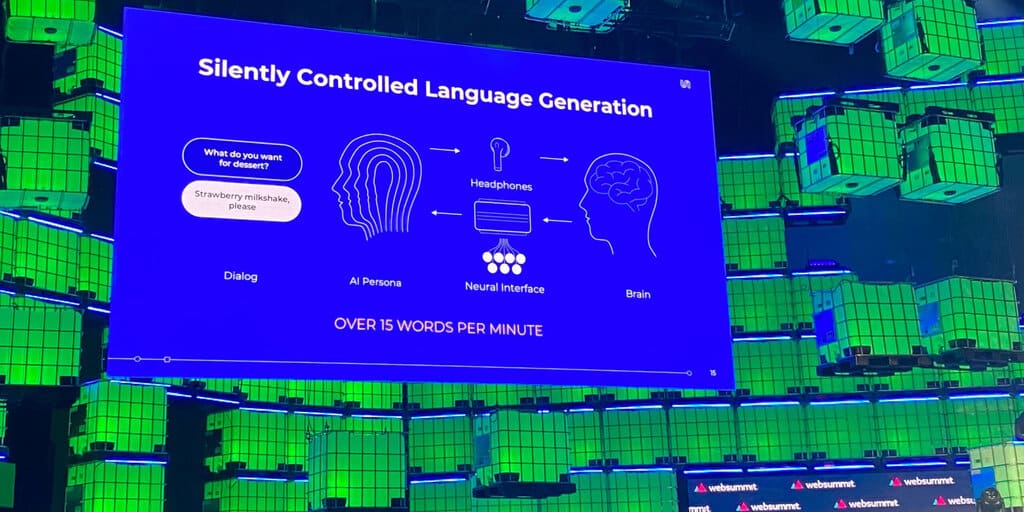

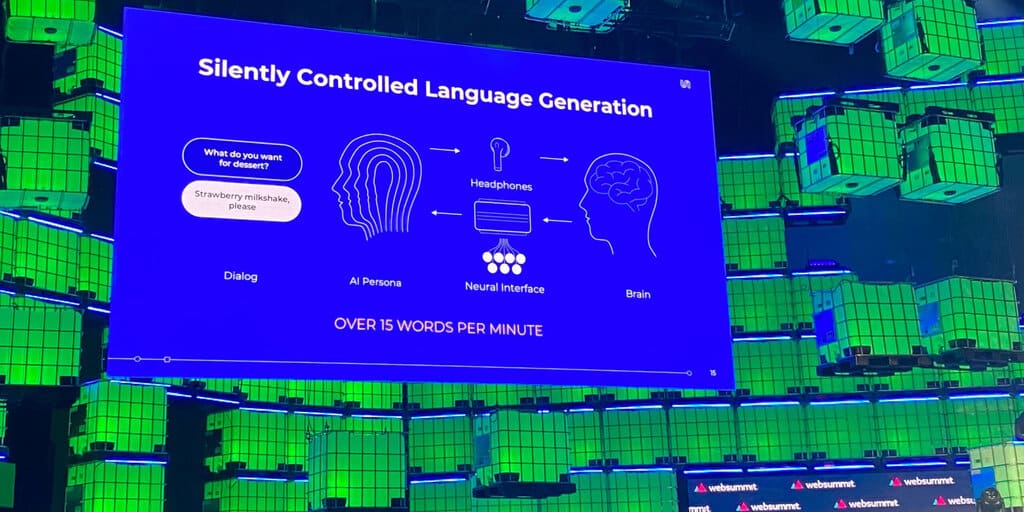

Project Halo combines a non-invasive neural interface with generative AI to transform bioelectrical signals into language.

“There is a universal language that happens in our minds,” said Unbabel CEO Vasco Pedro. “What I mean by this is that when you look at fMRI images of people who speak different languages but think about the same thing, they activate essentially the same parts of the brain.”

Pedro demonstrated how Project Halo lets users hear incoming messages narrated through headphones and then send responses in complete silence, simply by thinking about what they want to say.

A way to respond to messages without speaking or typing has many potential uses, Pedro says. They range from routine situations, such as answering texts in a dark movie theater, to life-changing ones, such as giving people with amyotrophic lateral sclerosis (ALS) the ability to communicate through written or voice messages.

By training a text-to-speech model on the user's own voice, the system can also speak those responses aloud.

Pedro showed an example of how Project Halo enabled an ALS patient to silently communicate his lunch order. The system decoded his intended response from his neural signals, then spoke the written response aloud using a digital version of his voice, recorded before he lost the ability to speak.

Unbabel's system isn't the first mind-reading AI. As Decrypt previously reported, Meta has developed a system that can scan brain activity and visualize the images a person is seeing. The AI achieved this by recording brain activity with magnetoencephalography (MEG) while participants looked at pictures, then reconstructing the original images from those recordings.

Neuralink, another major player in the field, is developing advanced neural interfaces and has received approval to begin testing its brain implants in humans.

Project Halo's key differentiator, however, is that it pairs reading neural signals with generating natural-language responses. As Pedro points out, this requires a language model that can craft messages accurately reflecting the user's personal context, relationships, preferences, and more.

Nor is it mind-reading run amok: the device responds only when users deliberately signal the answer they want to give.

“Basically, what's happening is, I'm reading the question through the AirPods, and I'm using the neural interface here in my hand; this is EMG,” Pedro explained. “It's mapping the biosignals, and what's happening is it's trying to generate the answer that I want to give, using a large language model that knows a lot about me.”

The system currently produces about 15 words per minute, a substantial improvement over earlier methods, which can be roughly half as fast.

Pedro said Project Halo is still in its early stages, but the company expects to launch it commercially in 2024. The aim is for Halo to offer seamless connectivity to everyone, regardless of physical ability.

“Our goal is to make AI good and enable everyone to communicate in any language, removing language as a barrier,” Pedro said.

Edited by Ryan Ozawa.
