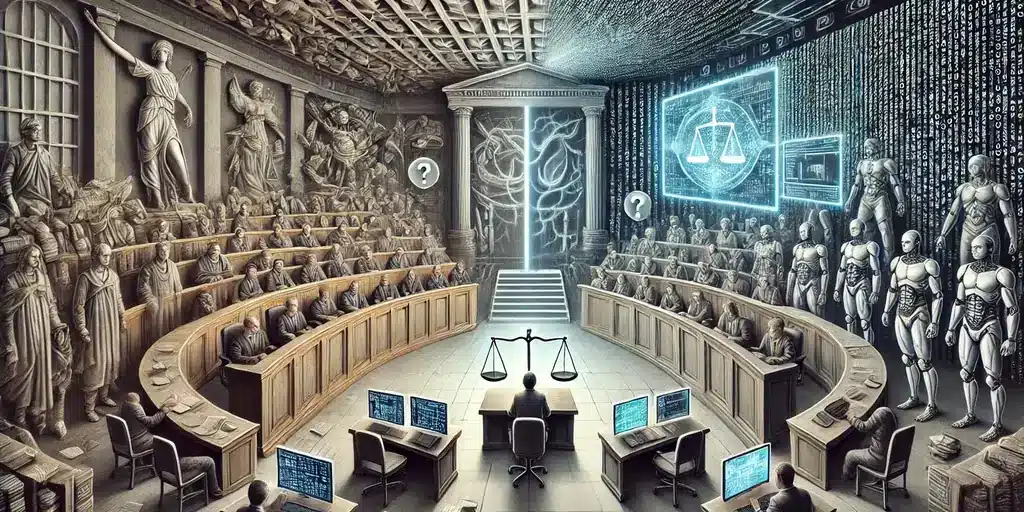

A new study has revealed an unexpected twist in the human vs. machine debate: People prefer AI decisions over human ones, at least when it comes to making tough calls on financial matters. But before you hand over the keys to your finances, note that some serious red flags are waving.

In a study published on Tuesday, researchers Marina Chugunova and Wolfgang J. Luhan found that more than 60% of participants would choose an AI decision-maker to redistribute income.

The experiment had participants choose between a human and an algorithmic decision-maker, each tasked with making a fair decision based on fairness principles held by hundreds of people. The study found that “when there is no information on group membership, the algorithm is selected in 63.25% of all choices.”

That preference held even when the possibility of bias was introduced.

But here's the catch: while people like AI to call the shots, they rate human decisions more favorably.

“People are less satisfied with algorithmic decisions and find them less fair than human decisions,” the study says.

The study's design was clever. Participants chose between human and AI decision-makers to redistribute income earned through luck-, effort-, and talent-based tasks. Even when each participant's group membership was revealed, people still leaned toward AI, which could open the door to discrimination.

“Many companies are using AI for hiring decisions and compensation planning, and public bodies are employing AI in policing and enforcement strategies,” Luhan said in a press release. “Our findings suggest that when algorithmic consistency is improved, the public may support algorithmic decision-makers, even in morally significant areas.”

This isn't just an academic curiosity. As AI creeps into more areas of life, from human resources to dating, how we perceive fairness could make or break public support for AI-driven policies.

AI's track record on fairness, however, is spotty, to put it mildly.

Recent studies have shown persistent bias issues in AI systems. In 2022, the UK's Information Commissioner's Office launched an investigation into cases of AI-driven discrimination, and researchers have found that the most popular LLMs show clear political biases. Grok, from Elon Musk's xAI, is specifically instructed not to give “woke” answers.

Worse, researchers from the universities of Oxford, Stanford, and Chicago found that AI models were more likely to recommend the death penalty for defendants who speak African American English.

AI in hiring? Researchers caught AI models passing over resumes with Black-sounding names and favoring Asian women. According to Bloomberg Technology, resumes with names distinctive to Black Americans were the least likely to be ranked as the top candidate for a financial analyst role.

Cass R. Sunstein's work on what he calls AI-powered “choice engines” paints a similar picture, suggesting that while AI may improve decision-making, it may also amplify or exploit existing biases. As he puts it, “Paternalistic or not, AI can suffer from its own behavioral biases. There is evidence that LLMs exhibit some of the biases that humans do.”

However, some researchers, such as Bo Cowgill and Catherine Tucker, argue that AI is perceived as neutral by all parties, which reinforces its image as a fair player in decision-making. “Algorithmic bias may be easier to measure and address than human bias,” they wrote in a 2020 research paper.

In other words, once deployed, AI appears more controlled and logical, and easier to correct if it strays from its intended purpose. This idea of cutting out the middleman entirely is central to the philosophy behind smart contracts: self-executing agreements that run autonomously without the need for judges, escrow agents, or human reviewers.

Some accelerationists see AI governance as a compelling path to fairness and efficiency in global society. However, this could lead to a new form of “deep state,” one controlled not by shadowy oligarchs but by the architects and trainers of these AI systems.

Edited by Ryan Ozawa.
