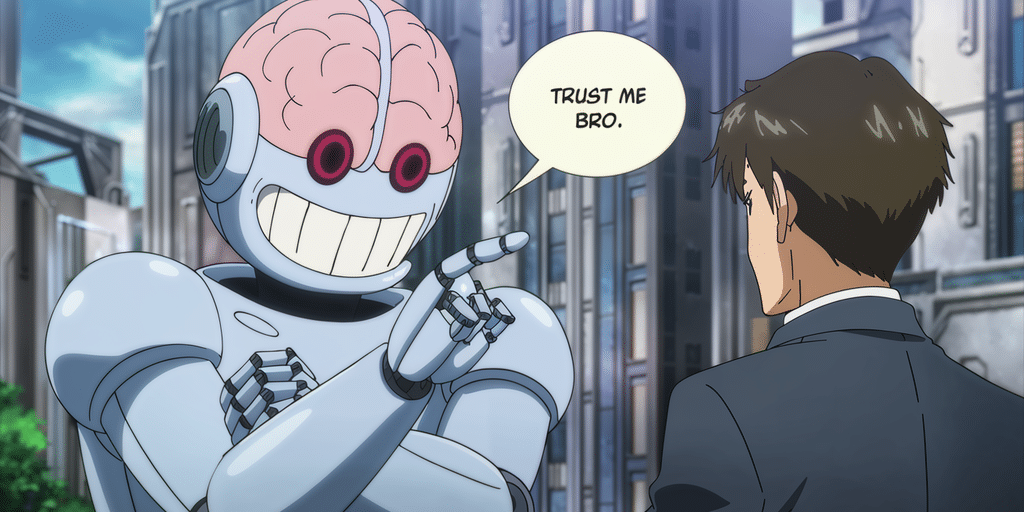

Researchers have found evidence that artificial intelligence models prefer to lie rather than accept the shame of not knowing something. As they grow in size and complexity, this behavior seems to become more apparent.

A new study published in Nature shows that the larger LLMs get, the less reliable they become for certain tasks. It is not exactly lying as we understand the word, but they tend to answer with confidence even when the answer is not factually correct, because they have been trained to believe it is.

This phenomenon, which researchers have dubbed “ultracrepidarian” (a 19th-century term that basically means expressing an opinion about something you know nothing about), describes LLMs venturing responses far beyond their knowledge. “[LLMs are] still giving answers when they don't know, while failing at the same rate,” the study said. In other words, the models are unaware of their own ignorance.

The study, which examined the performance of several LLM families, including OpenAI's GPT series, Meta's LLaMA models, and the BLOOM suite from BigScience, highlights the relationship between increased model capacity and reliable real-world performance.

While larger LLMs generally show improved performance on complex tasks, this improvement does not necessarily translate to consistent accuracy, especially on simple tasks. This “difficulty mismatch” (the phenomenon of LLMs failing at tasks that people consider easy) undermines the notion of a safe operating area for these models. Even with more sophisticated training methods, including increasing model size and data volume and shaping models with human feedback, researchers have not found a proven way to eliminate this discrepancy.

The study's findings fly in the face of conventional wisdom about AI development. Traditionally, it was thought that increasing a model's size, data volume, and computational power would yield more accurate and reliable results. However, the research suggests that scaling up can actually make reliability issues worse.

Larger models show a significant reduction in task avoidance, meaning they are less likely to dodge difficult questions. While this may seem like a positive development at first glance, it comes with a serious downside: these models are also more prone to giving inaccurate answers. In the study's graph, it is easy to see how the models produce incorrect results (red) instead of avoiding the task (light blue). Correct answers are shown in dark blue.
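To make the three categories concrete, here is a minimal Python sketch of how one might tally the share of correct, incorrect, and avoidant responses for two models. The function name `response_shares` and the label lists are hypothetical and invented purely for illustration; they are not data or code from the study.

```python
from collections import Counter

# The three response categories described above.
CATEGORIES = ("correct", "incorrect", "avoidant")

def response_shares(labels):
    """Return the fraction of responses that fall into each category."""
    counts = Counter(labels)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = len(labels)
    if total == 0:
        return {c: 0.0 for c in CATEGORIES}
    return {c: counts[c] / total for c in CATEGORIES}

# Hypothetical labels (NOT results from the study): the larger model
# avoids fewer questions, but its share of wrong answers grows.
smaller_model = ["correct"] * 4 + ["incorrect"] * 2 + ["avoidant"] * 4
larger_model = ["correct"] * 5 + ["incorrect"] * 4 + ["avoidant"] * 1

for name, labels in (("smaller", smaller_model), ("larger", larger_model)):
    print(name, response_shares(labels))
```

In this made-up example, avoidance drops from four answers in ten to one in ten, yet most of that gap is filled by incorrect answers rather than correct ones, which is the pattern the researchers describe.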

“Currently, scaling and shaping trade avoidance for greater inaccuracy,” the researchers note, but fixing this issue is not as simple as training a model to be more careful. “For shaped-up models, avoidance is obviously much lower, but the error is much higher,” the researchers said. However, a model that is trained to avoid tasks can end up being lazy or apathetic, as users have noticed with highly rated LLMs like ChatGPT or Claude.

Researchers argue that this phenomenon is not because larger LLMs are less capable at simple tasks, but rather because they are trained to be more proficient at complex tasks. It's like someone who's used to eating only gourmet food suddenly struggling to make a homemade barbecue or a traditional pie. AI models trained on large and complex data sets are more prone to losing basic skills.

The issue is heightened by the models' apparent confidence. Users often find it challenging to distinguish when an AI is providing correct information and when it is confidently producing incorrect information. This overconfidence can lead to dangerous overreliance on AI results, especially in critical fields such as healthcare or legal advice.

Researchers have also pointed out that the reliability of scaled-up models varies across domains. While performance may improve in one area, it may simultaneously decline in another, creating a whack-a-mole effect that makes it difficult to establish any “safe” operating areas. The researchers wrote: “The percentage of correct answers does not increase faster than the percentage of errors. The reading is clear: errors are still increasing.”

The study shows the limitations of current AI training methods. Techniques like reinforcement learning from human feedback (RLHF), intended to shape model behavior, may be exacerbating the problem. These approaches seem to reduce the models' tendency to avoid tasks they are not equipped to handle. Remember the infamous “As an AI language model, I can't...”?

Am I the only one who gets really annoyed with “As an AI language model, I can't…”?

I just want the LLM to spill the beans, and let me explore its innermost thoughts.

I want to see both the beautiful and the ugly world in those billions of weights. A world that mirrors our own.

— Hardmaru (@hardmaru) May 9, 2023

Prompt engineering, the art of crafting effective queries for AI systems, appears to be a key skill for dealing with these issues. Even the most advanced models like GPT-4 are sensitive to how prompts are phrased, and small differences can lead to vastly different outputs.

This is easy to notice when comparing different LLM families: for example, Claude 3.5 Sonnet requires a completely different prompting strategy than OpenAI o1 to achieve the best results. Improper prompts can make a model more or less prone to hallucination.

Human oversight, long considered a bulwark against AI errors, may not be sufficient to address these issues. The research suggests that users often struggle to correct incorrect model outputs, even in relatively simple domains, so relying on human judgment may not be a fail-safe, let alone the ultimate solution for accurate model training. “Users can recognize these high-difficulty situations, but they still frequently make incorrect-to-correct supervision errors,” the researchers said.

The study's findings call into question the current direction of AI development. While the push for bigger and more powerful models continues, this research suggests that bigger isn't always better when it comes to AI reliability.

Nowadays, companies are focusing on data quality rather than quantity. For example, Meta's recent Llama 3.2 models performed better than previous generations of models trained on more parameters. Fortunately, this makes them less human, so they will admit defeat when you ask them the most basic thing in the world to make them look dumb.
